As you may already know, AI companies love throwing around big numbers: billions of tokens per minute, trillions per month, etc. But how does that actually compare to us? How much does humanity speak?

Let's find out.

First: what's a token?

AI models don't read words like we do. They break text into smaller chunks called tokens: sometimes a full word, sometimes just a piece of one. Kinda like syllables. How text is split into tokens also depends on the model.

The rough conversion for English text: 1000 tokens ≈ 750 words, i.e. about 0.75 words per token.
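For anyone who wants to play with the conversion, here is a minimal sketch of the rule of thumb above. The constant and function names are made up for illustration, and the 0.75 ratio is only an approximation that shifts with the model and the language.

```python
# Rule of thumb from the text: 1000 tokens ≈ 750 words.
# This is an approximation; real tokenizers vary by model and language.
WORDS_PER_TOKEN = 0.75


def words_to_tokens(words: float) -> float:
    """Estimate how many tokens a given number of words corresponds to."""
    return words / WORDS_PER_TOKEN


def tokens_to_words(tokens: float) -> float:
    """Estimate how many words a given number of tokens corresponds to."""
    return tokens * WORDS_PER_TOKEN


if __name__ == "__main__":
    print(words_to_tokens(750))    # roughly 1000 tokens
    print(tokens_to_words(1000))   # roughly 750 words
```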

Estimating human speech (in tokens)

The goal here is just to get an order of magnitude. Nothing precise, just coherent enough to get a rough idea.

Words spoken per person per day: ~15,000 (decent global average)

World population: ~8 billion

Total words per year:

\[W_{\text{yr}} \approx (8 \times 10^9) \times (1.5 \times 10^4) \times 365 \approx 4.4 \times 10^{16}\]

Converting to tokens (dividing by 0.75):

\[T_{\text{yr}} \approx \frac{W_{\text{yr}}}{0.75} \approx 5.8 \times 10^{16} \approx 6 \times 10^{16} \text{ tokens/year}\]

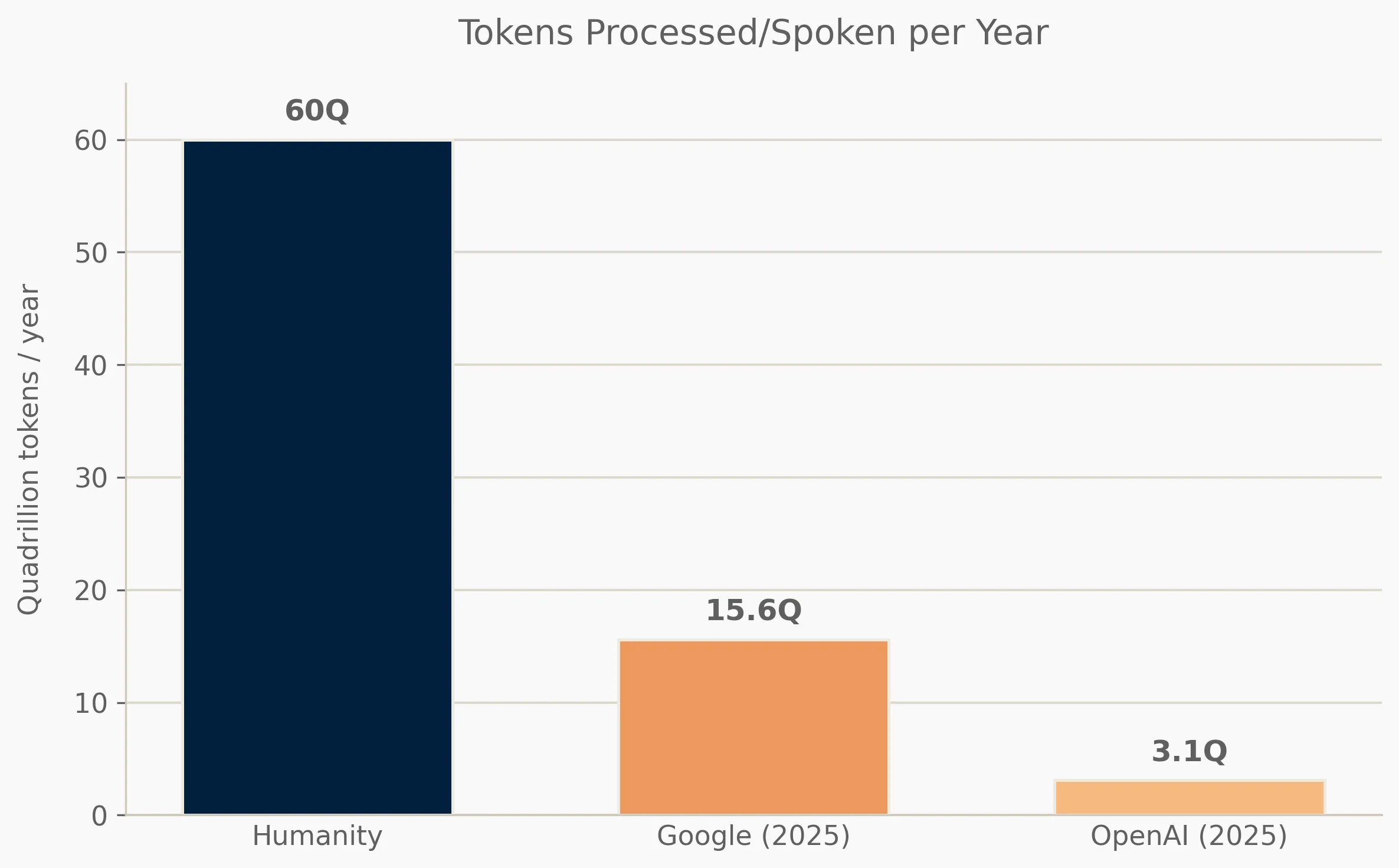

That's ~60 quadrillion tokens per year. Sixty million billion!
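The estimate above is easy to reproduce. This is a rough sketch using only the inputs stated in the text (8 billion people, ~15,000 words per day, 0.75 words per token); the variable and function names are illustrative, and every input is an order-of-magnitude assumption rather than a measurement.

```python
# Inputs taken from the text; all are rough, order-of-magnitude assumptions.
WORLD_POPULATION = 8e9            # people
WORDS_PER_PERSON_PER_DAY = 1.5e4  # assumed global average
DAYS_PER_YEAR = 365
WORDS_PER_TOKEN = 0.75            # 1000 tokens ≈ 750 words


def human_words_per_year() -> float:
    """Total words spoken by humanity in one year."""
    return WORLD_POPULATION * WORDS_PER_PERSON_PER_DAY * DAYS_PER_YEAR


def human_tokens_per_year() -> float:
    """The same quantity expressed in tokens."""
    return human_words_per_year() / WORDS_PER_TOKEN


if __name__ == "__main__":
    print(f"words/year:  {human_words_per_year():.2e}")
    print(f"tokens/year: {human_tokens_per_year():.2e}")
```

The unrounded result is about 5.8 × 10^16 tokens per year, which the text rounds up to 6 × 10^16.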

Now: how does AI compare?

Numbers from the biggest labs, late 2025:

OpenAI (DevDay, October 2025): processing over 6 billion tokens/minute → × 525,600 minutes/year ≈ 3.1 quadrillion tokens/year → ~5% of human speech.

Google (CEO earnings remarks, October 2025): over 1.3 quadrillion tokens/month → × 12 ≈ 15.6 quadrillion tokens/year → ~26% of human speech.

Together, just two companies are now processing the token equivalent of nearly one-third (~31%) of all the words humanity speaks in a year.
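To check the percentages, here is a small sketch that annualizes the two reported rates and divides by the rounded human estimate of 6 × 10^16 tokens per year. The names are illustrative, the lab figures are the ones quoted above, and it assumes those rates hold constant for a full year.

```python
# Figures quoted in the text; assumes each reported rate stays flat all year.
MINUTES_PER_YEAR = 60 * 24 * 365   # 525,600 minutes in a non-leap year
MONTHS_PER_YEAR = 12
HUMAN_TOKENS_PER_YEAR = 6e16       # rounded human-speech estimate from the text


def share_of_human_speech(ai_tokens_per_year: float) -> float:
    """Fraction of yearly human speech (in tokens) that a token volume represents."""
    return ai_tokens_per_year / HUMAN_TOKENS_PER_YEAR


openai_per_year = 6e9 * MINUTES_PER_YEAR      # from 6 billion tokens/minute
google_per_year = 1.3e15 * MONTHS_PER_YEAR    # from 1.3 quadrillion tokens/month
combined_per_year = openai_per_year + google_per_year

if __name__ == "__main__":
    for name, tokens in [
        ("OpenAI", openai_per_year),
        ("Google", google_per_year),
        ("Combined", combined_per_year),
    ]:
        share = share_of_human_speech(tokens)
        print(f"{name}: {tokens:.2e} tokens/year, {share:.1%} of human speech")
```

This gives roughly 5% for OpenAI, 26% for Google, and about 31% combined, in line with the "one-third" figure.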

We tend to think of "human scale" as some unreachable ceiling. It's not. AI is getting close to out-talking us, and these numbers are already hard to wrap your head around. Curious to see where this goes.